Summary

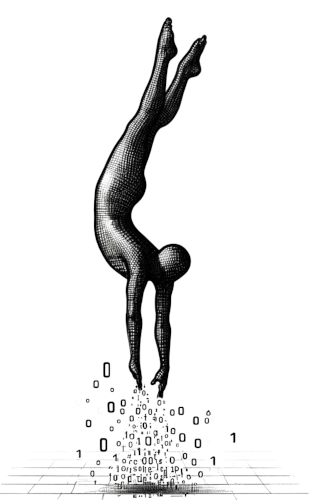

Despite great progress in Natural Language Processing (NLP), the field still struggles to ensure that models are robust and fair. So far, NLP has prioritized data size over data quality. Yet growing evidence suggests that data diversity, a key dimension of data quality, is crucial for fair and robust NLP models. Many researchers are therefore trying to create more diverse datasets, but there is no clear path for them to follow. Even the fundamental question “How can we measure the diversity of a dataset?” is currently wide open. We still lack the tools and theoretical insights to understand, improve, and leverage data diversity in NLP.

DataDivers will 1) develop a framework to measure data diversity in NLP datasets; 2) investigate how data diversity impacts NLP model behavior; and 3) develop novel approaches that harness data diversity for fairer and more robust NLP models.

👥 Team

Cantao Su (PhD student), Menan Velayuthan (PhD student), Anna Wegmann (postdoc), Esther Ploeger (postdoc).

✨ Output

Publications:

- Nguyen and Ploeger. We Need to Measure Data Diversity in NLP - Better and Broader. EMNLP 2025.

- Du et al. Disentangling the Roles of Representation and Selection in Data Pruning. ACL 2025.

Activities: Our team is participating in the 2nd UniDive Training School in Yerevan (2026)! We presented a poster at the Workshop on Diversity in Large Speech and Language Models (Berlin, 2025).

🤝 Contact

PI: Dong Nguyen.

This project is embedded in the NLP & Society Lab (headed by dr. Nguyen), part of the Natural Language Processing group at the Department of Information and Computing Sciences at Utrecht University.

🏛️ Funding

This 5-year project (2025-2029) is funded by an ERC Starting Grant.